ANSYS RSM Cluster (ARC) Job Submission from Rescale Desktops

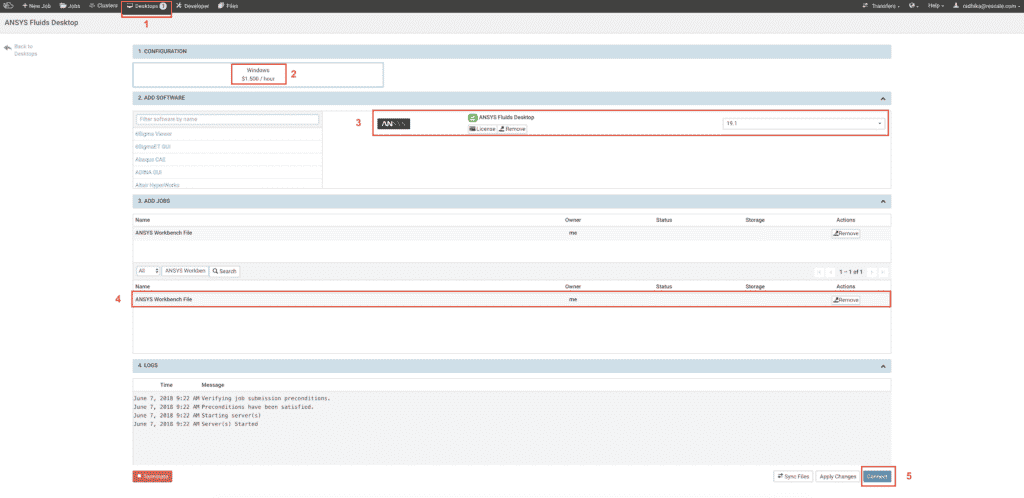

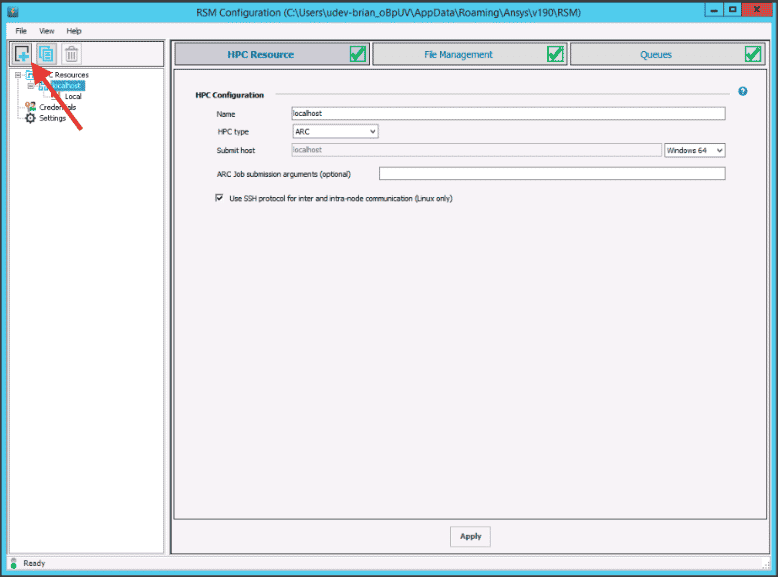

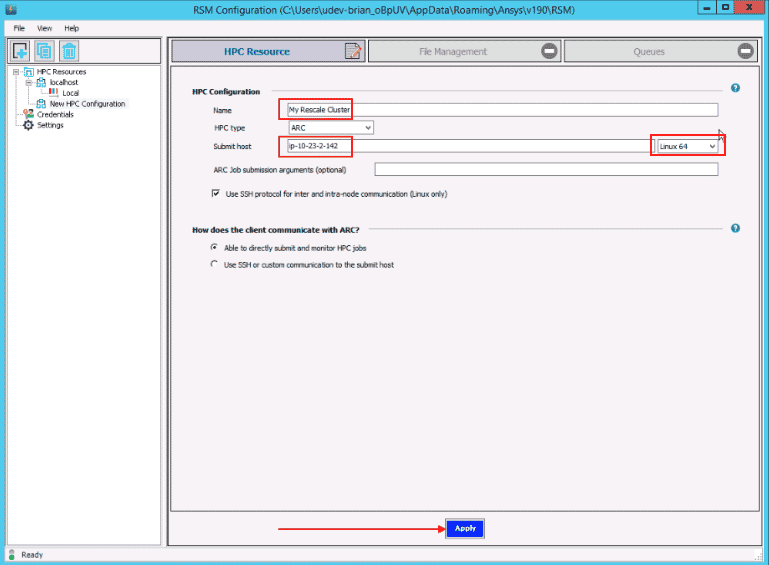

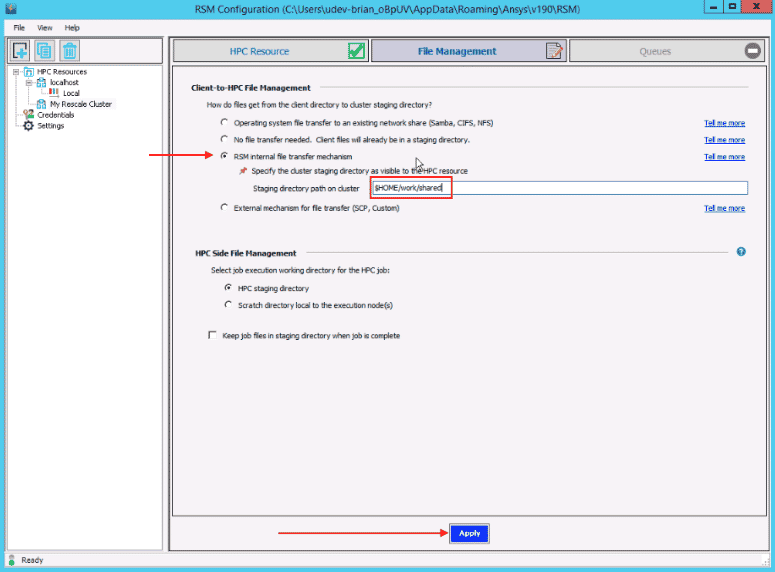

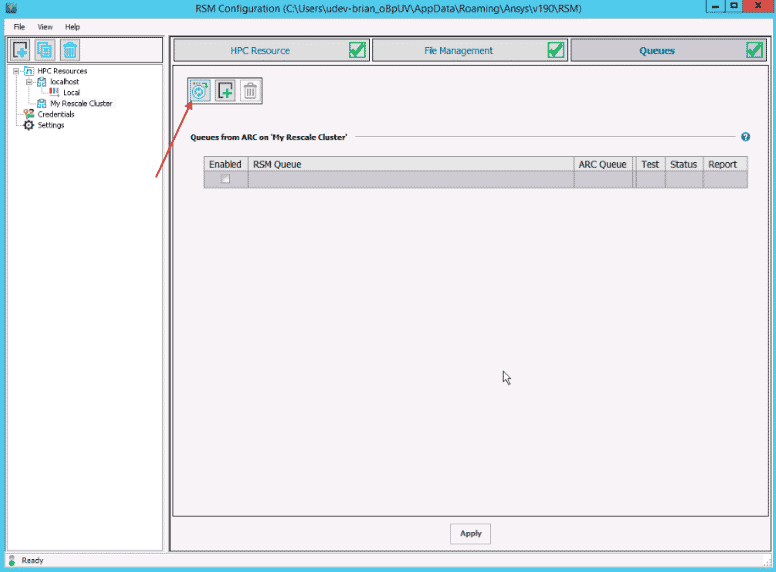

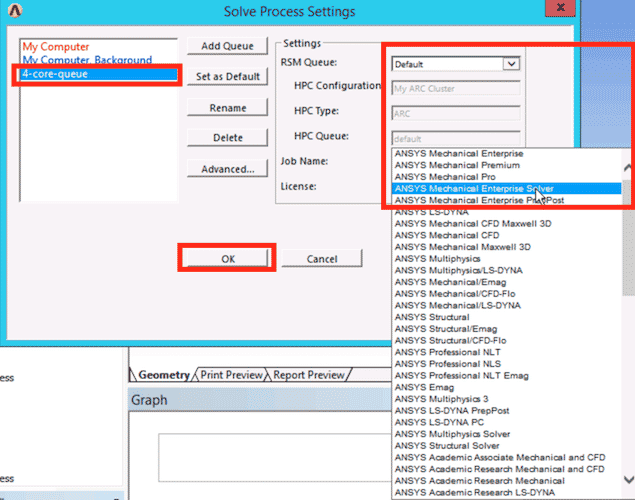

This tutorial outlines how to set up a Rescale ARC cluster running the ANSYS RSM Cluster scheduler. This allows job submissions from Rescale desktops to Linux compute nodes.

Limitations

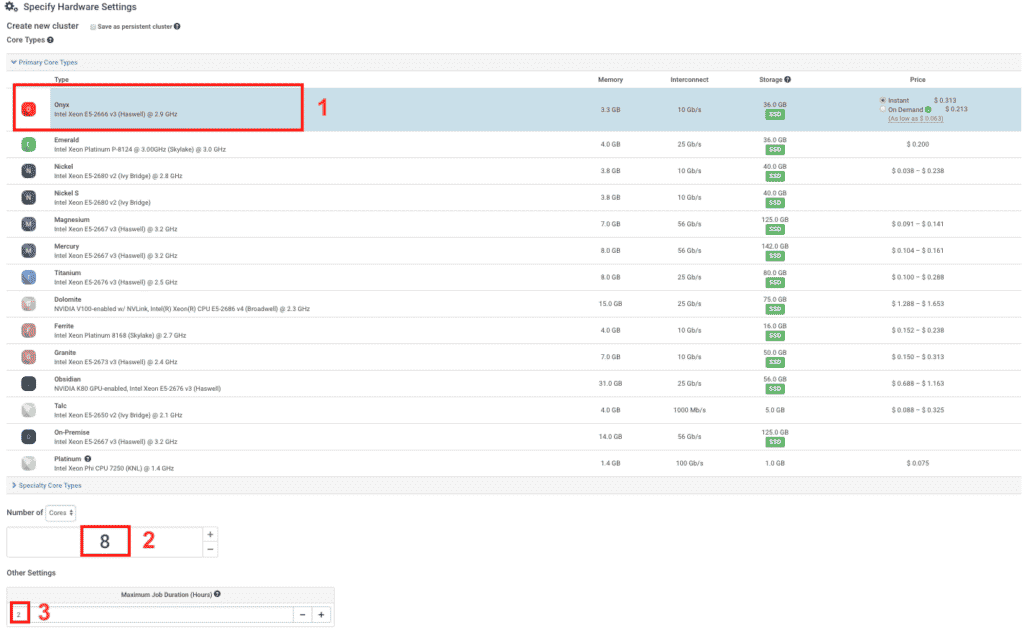

- Due to cloud provider compatibility, ARC is available only on certain core types

- ARC will not automatically shut down the provisioned Linux compute cluster. As a result, the user will have to manually terminate the cluster. To be safe, a wall time should be set on the provisioned compute cluster

- ARC transmits input and output data over the network. Jobs with large input or output may run longer than expected due to the time required to transfer files

- ARC is supported on versions 19.0 and higher
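
To get a feel for the file-transfer limitation above, the following is a minimal back-of-the-envelope sketch. The function name `estimate_transfer_seconds`, the example file size, and the bandwidth figure are illustrative assumptions, not values from Rescale or ANSYS; actual throughput depends on the network path between the desktop and the compute nodes.

```python
def estimate_transfer_seconds(size_gb: float, bandwidth_mbps: float) -> float:
    """Rough time to move `size_gb` gigabytes over a link of `bandwidth_mbps` megabits per second.

    Hypothetical helper for illustration only; it ignores protocol overhead,
    latency, and compression.
    """
    if bandwidth_mbps <= 0:
        raise ValueError("bandwidth_mbps must be positive")
    # Decimal units: 1 GB = 1000 MB, 1 byte = 8 bits.
    size_megabits = size_gb * 1000 * 8
    return size_megabits / bandwidth_mbps


# Assumed example: 10 GB of results over an assumed 100 Mbit/s effective link.
seconds = estimate_transfer_seconds(10, 100)
print(f"Estimated transfer time: {seconds / 60:.1f} minutes")
```

An estimate like this can help when choosing a wall time for the cluster, since the transfer time adds to the solver run time.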

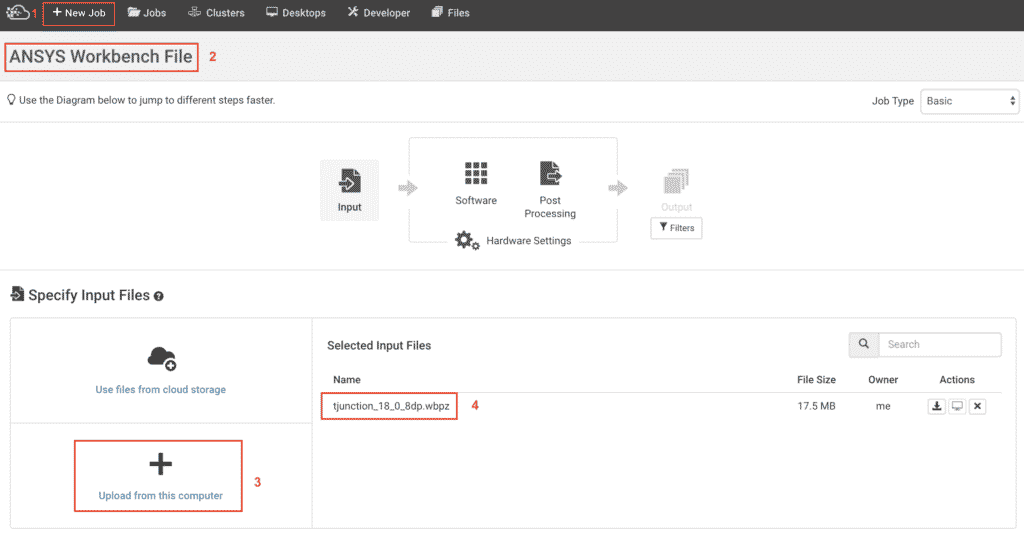

This tutorial presents an example using an ANSYS Fluent Workbench project. To obtain the Workbench project file (.wbpz) for the tutorial, click the Import Workbench Project button below, then click the Save option in the top right corner of the job submission page to keep a copy of this file in your Rescale cloud files.

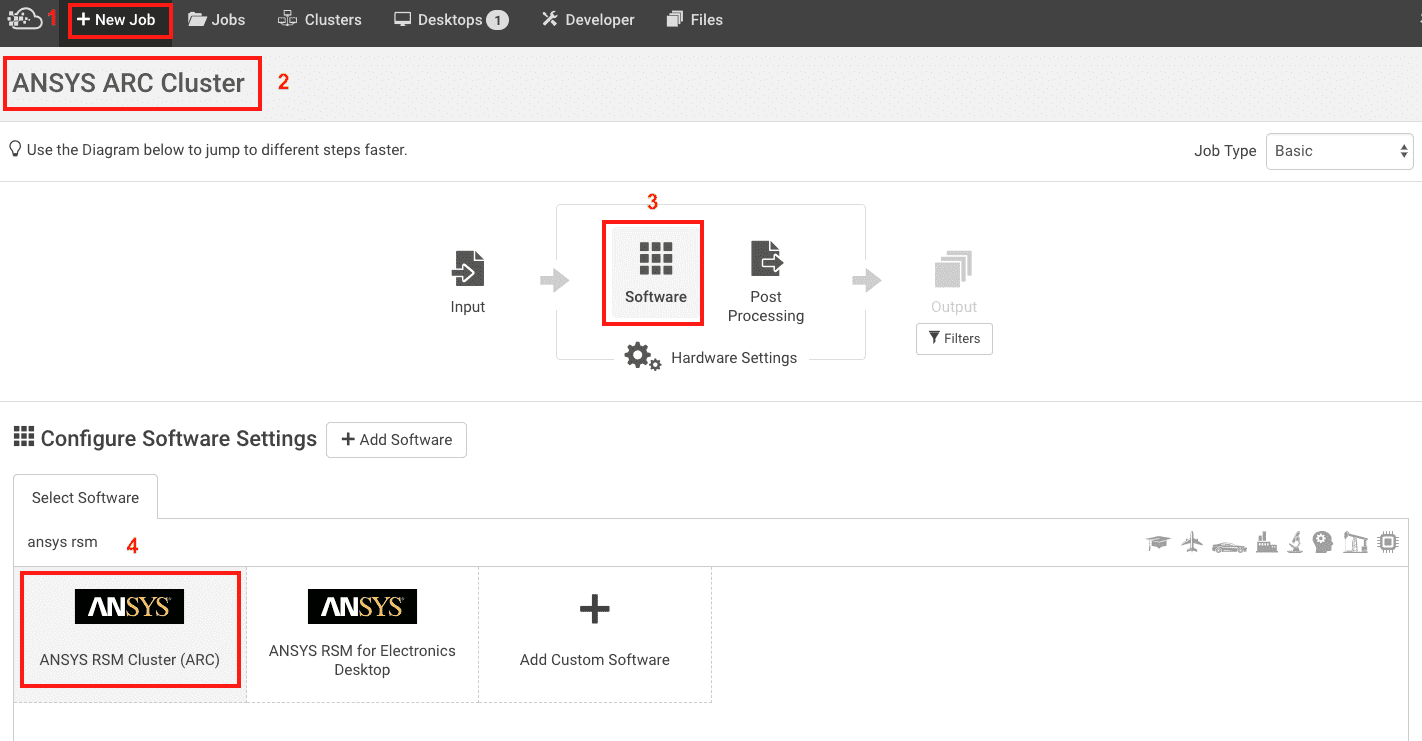

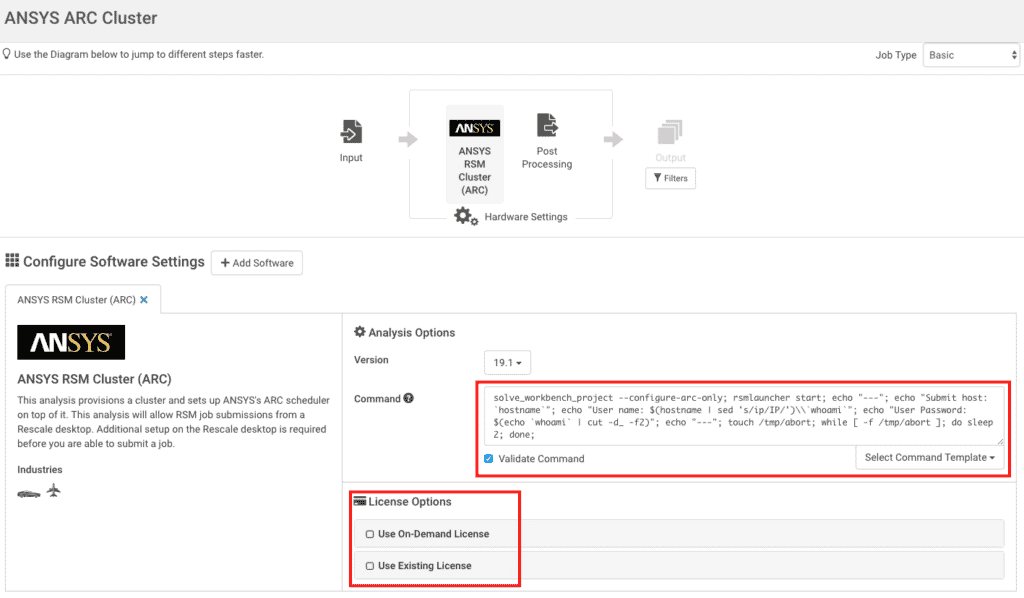

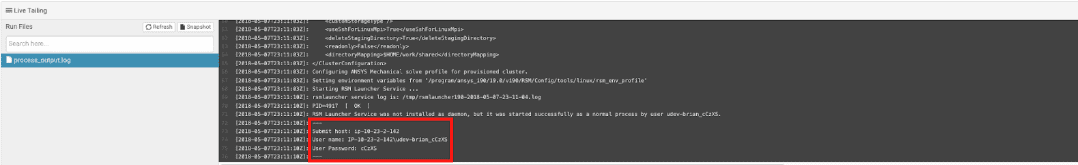

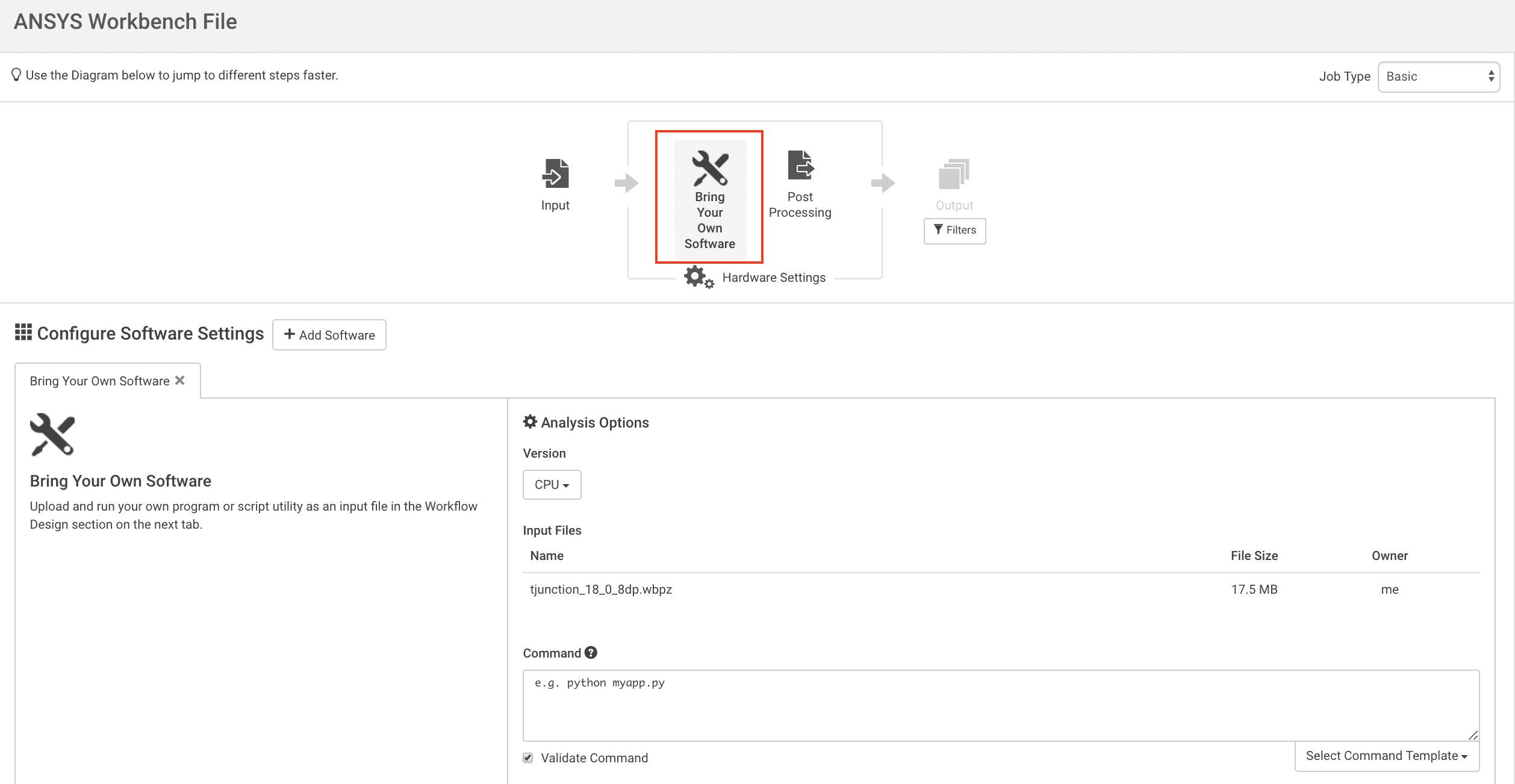

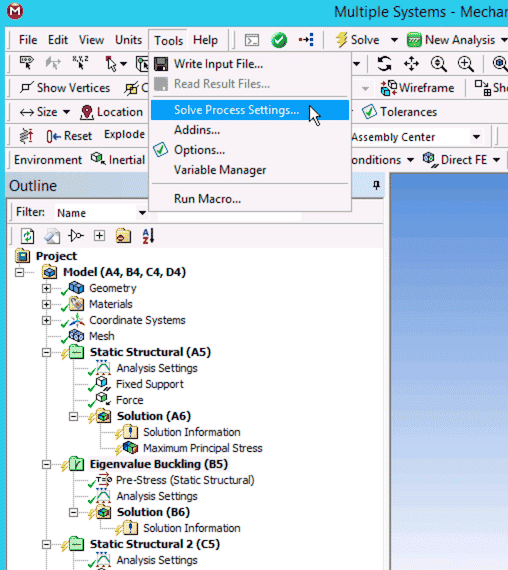

The steps are as follows: