Four Ways to Digitally Transform with HPC in the Cloud

The cloud revolution has begun, and High Performance Computing (HPC) is next. As cloud computing rapidly becomes better, faster, and cheaper than on-premises infrastructure, no workload will be left untouched, and companies will need to adopt it to remain competitive over the next decade and beyond.

So what is the cloud transformation in HPC? Why are on-premises HPC systems not enough anymore?

LESSON ONE: Cloud computing drives agility, faster innovation, and just-in-time procurement

Cloud computing is the best way to rapidly deliver new compute power to meet demand. For companies to stay competitive, they must embrace broad technology trends and innovation.

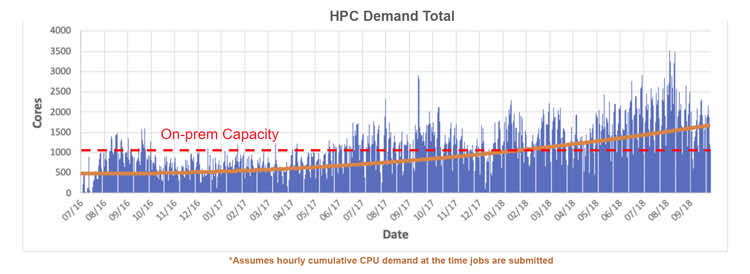

Compute usage is accelerating as companies penetrate new markets and personalize the consumer experience while also balancing stricter regulation. Figure 1 shows a threefold increase in compute demand over three years for one application alone at a Top 5 automotive supplier. In the last year, peak demand reached double the available on-premises capacity. To date, buying on-premises compute has been the answer, but this is rapidly changing.

First, best-in-class companies accelerate innovation by shortening the time it takes new users to access capacity. Rather than restricting or prioritizing access to on-premises resources because capacity is limited, they use cloud scale and availability to open HPC to new audiences and scenarios. Engineers and scientists are able to run higher-fidelity analyses and more simulations, reducing defects and warranty issues and winning more contracts. Timely access to compute also enables engineers to explore new ways to innovate. Engineering departments must quickly adopt new methods, tools, and technology, and IT needs to empower them rather than limit them.

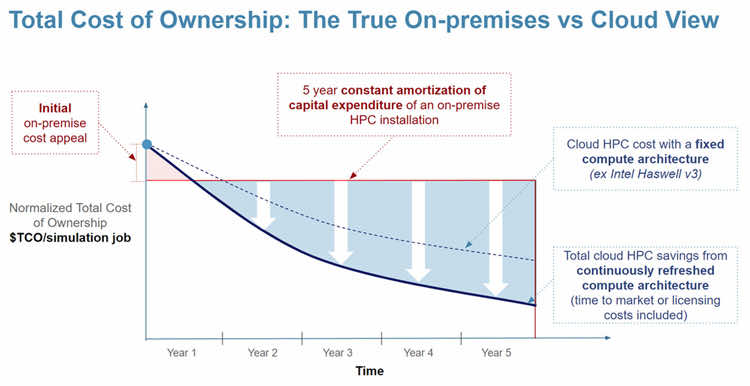

Second, cloud capacity can be deployed faster and with more agility than on-premises compute. Legacy provisioning purchases on-premises hardware as a depreciated capital expense, so it is constrained by how long it takes to secure capital in tight markets. It also carries the risk of assuming that hardware needs will stay static over the lifetime of the hardware: five years is an eternity in today’s evolving competitive marketplace. In contrast, leading-edge organizations have embraced the cloud to drive business transformation. By transforming Capex to Opex, companies can shorten procurement and learning cycles to weeks instead of years. As illustrated in Figure 2, shifting to paying cloud computing expenses per job pays off in a short period of time. By continually refreshing compute architecture and riding innovation curves in hardware performance gains, such organizations can avoid long and costly RFPs and minimize risk by enabling rapid iteration.
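One way to reason about the Capex-to-Opex trade-off is to compare cumulative spend under each model. The Python sketch below is illustrative only: the function names and every dollar figure are hypothetical assumptions, not values taken from Figure 2 or from any real deployment, and it ignores factors such as hardware refresh, utilization, and discounts.

```python
from typing import Optional


def on_prem_cumulative_cost(months: int, capex: int, monthly_opex: int) -> int:
    """Upfront hardware purchase plus running costs (power, staff, etc.)."""
    return capex + monthly_opex * months


def cloud_cumulative_cost(months: int, jobs_per_month: int, cost_per_job: int) -> int:
    """Pay-per-job spend with no upfront purchase."""
    return jobs_per_month * cost_per_job * months


def break_even_month(capex: int, monthly_opex: int, jobs_per_month: int,
                     cost_per_job: int, horizon_months: int = 60) -> Optional[int]:
    """First month in which cumulative cloud spend reaches on-premises spend.

    Returns None if cloud stays cheaper for the whole horizon
    (60 months mirrors the five-year hardware lifetime mentioned above).
    """
    for month in range(1, horizon_months + 1):
        cloud = cloud_cumulative_cost(month, jobs_per_month, cost_per_job)
        on_prem = on_prem_cumulative_cost(month, capex, monthly_opex)
        if cloud >= on_prem:
            return month
    return None


if __name__ == "__main__":
    # Hypothetical inputs chosen only to exercise the functions.
    result = break_even_month(capex=1_200_000, monthly_opex=10_000,
                              jobs_per_month=400, cost_per_job=50)
    print("Break-even month within 5 years:", result)
```

With inputs like these, a team can see whether pay-per-job spend would ever catch up with an upfront purchase during the hardware's lifetime, and how sensitive that answer is to job volume.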

Cloud-based HPC is a best practice for delivering new value to the business unit in weeks instead of years, scaling on demand just when the business needs it.

LESSON TWO: Cloud HPC data is shared more easily with mainstream business workflows

Companies need to better understand the possibilities to innovate. A key objective of the digitization effort is to harvest data to more quickly surface information that matters to different stakeholders. In contrast with legacy on-premises HPC systems that may be disconnected from other business practices, cloud HPC data and metadata are shared more easily. By missing this trend, IT organizations miss the opportunity to capture precious engineering information (e.g., model size, procedure, project, and key results). With access to data from HPC workloads, IT administrators and engineering leaders can make more informed decisions and continuously take actions that drive efficiencies. For example, teams can apply techniques like artificial intelligence to HPC metadata to increase automation and optimization. A user could, for instance, quickly retrieve a past job with similar attributes, thereby eliminating analysis rework. It is also essential to capture HPC information in relation to the application, or even the model used, to put the data in its full context. This historical HPC workload data could then generate recommendations for which compute architecture offers the best cost-performance based on how models are sized or which applications are used.
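As a rough illustration of retrieving a past job with similar attributes, the Python sketch below ranks historical jobs that used the same application by how close their model size is to a new job. The `JobRecord` fields, the distance measure, and the sample records are all hypothetical assumptions made for this example; a real system would draw on whatever metadata the HPC platform actually captures.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class JobRecord:
    """Hypothetical slice of HPC job metadata."""
    job_id: str
    application: str
    project: str
    model_size: int  # e.g. number of mesh cells (assumed unit)


def size_distance(a: JobRecord, b: JobRecord) -> float:
    """Relative difference in model size: 0.0 means identical sizes."""
    largest = max(a.model_size, b.model_size, 1)  # guard against division by zero
    return abs(a.model_size - b.model_size) / largest


def find_similar_jobs(query: JobRecord, history: List[JobRecord],
                      k: int = 3) -> List[JobRecord]:
    """Return up to k past jobs with the same application, closest in size first."""
    candidates = [
        job for job in history
        if job.application == query.application and job.job_id != query.job_id
    ]
    return sorted(candidates, key=lambda job: size_distance(query, job))[:k]


if __name__ == "__main__":
    # Made-up records used only to demonstrate the lookup.
    history = [
        JobRecord("job-a", "cfd", "project-1", 1_000_000),
        JobRecord("job-b", "cfd", "project-2", 5_000_000),
        JobRecord("job-c", "fea", "project-1", 1_100_000),
    ]
    new_job = JobRecord("job-q", "cfd", "project-3", 1_200_000)
    for match in find_similar_jobs(new_job, history, k=2):
        print(match.job_id, round(size_distance(new_job, match), 3))
```

The same ranked history could feed a simple recommendation, for example suggesting the hardware configuration that the closest past job ran on.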

LESSON THREE: Full stack cloud HPC platform provides a fully extensible and traceable experience

The managed cloud experience can provide a fully engineered experience that integrates with the entire enterprise stack. Traceability is essential for companies to measure trade-offs and manage uncertainty. Since every modeled behavior is built in a different system, HPC applications and underlying hardware environments are diverse and fragmented. This makes it hard for IT and engineering teams to drive consistent end-to-end traceability across the entire engineering or scientific process and to link their disparate tools together. Moreover, an engineer or scientist often uses more than one application in their toolchain to perform modeling, analysis, data regression, and post-processing visualization. With traditional HPC, those processes are captured in complex submission scripts, often written by the user, which are difficult to maintain, update consistently, and connect to the rest of the enterprise over time. With cloud HPC, each step of the process is tied to a specific hardware and software stack optimized for the best speedup. Cloud tiered storage also tailors storage to how the process flow is executed to optimize performance. This approach allows companies to move up a level of abstraction by formulating HPC workloads to match the underlying hardware capabilities while considerably reducing the amount of manual scripting required. In addition, cloud HPC has built-in APIs and other services to extend to internal resources such as PLM or to other cloud applications such as IoT platforms. It brings together new and legacy technologies to enable agile change management while maintaining the option to integrate future technology.

LESSON FOUR: Cloud computing enables ‘as a service’ economy for HPC

Cloud HPC enables a robust partner ecosystem for co-innovation. Just as the cloud has unlocked n-squared value networks in social media, transportation, media, and supply chains, cloud HPC will fundamentally change collaboration. Today’s organizations have engineering centers and customers distributed across the world, and the complexity of the supply chain is increasing. HPC has to facilitate teamwork across locations, bring compute resources close to each user, and overcome the challenges of data fragmentation and data gravity.

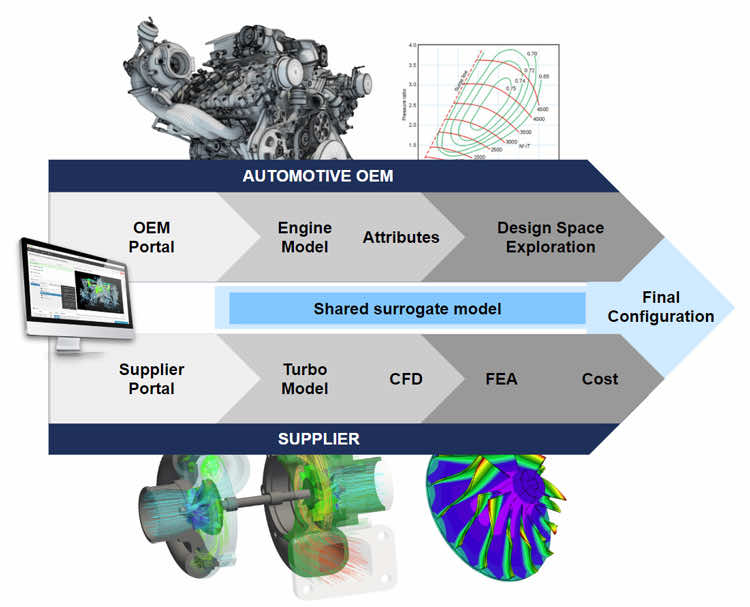

Digital transformation is driven by the power of the partner ecosystem. IDC predicts that “by 2021, 82% of revenues from digital transformation business models will be ecosystem enabled.” On-premises HPC architectures are more isolated systems and are not as well designed for efficient information exchange with external collaborators. In contrast, the cloud was built for sharing and collaborating across organizational boundaries. Cloud computing infrastructure unlocks new possibilities for HPC use cases with which an on-premises system cannot keep pace. For example, manufacturing companies are now adopting a model-centric approach to engineering called Model-Based Systems Engineering (MBSE) in an effort to focus more on managing and connecting the digital thread. The system-level model becomes the single source of truth for performing rapid trade-off studies. It is connected to component behavior models that might come from internal departments, but also from external suppliers. Cloud computing provides the abstraction layer that enables the computation of the MBSE toolchain while protecting the intellectual property of both the OEM and its suppliers (see Figure 3).

This method enables OEMs to iterate much faster on the design across the entire supply chain. It is also a first step toward building a digital twin to tap into new revenue sources and offer new experiences to customers. The digital twin enriches the MBSE model with data from an external ecosystem, such as sensors placed on physical assets. That opens opportunities for in-service predictive maintenance and other insights. The openness and decentralization of HPC architecture are key to evolving toward the ‘as a service’ economy and offering a better customer and partnership experience.

How to get started?

The first step in the digital journey is to craft a clear vision centered on the productivity gains and new revenue sources that HPC in the cloud can enable. Which areas of your business could benefit from more computational speed, extensibility, and collaboration?

Change management should also not be overlooked. HPC administrators need to consider the migration path to the cloud and its governance.

Rescale streamlines the HPC transition to the cloud with its ScaleX platform: a modern SaaS framework that scales to enterprise levels and supports thousands of simultaneous users and compute-intensive workloads. Together with our pool of experts who strive to make engineers and scientists more productive in the cloud, Rescale helps organizations accelerate their digital maturity.