Pitfalls of the Headless HPC Environment

Introduction

When running HPC on Rescale, or in any traditional HPC environment for that matter, it is essential that the solver can be run in a headless environment. What that means in practice is that ISVs have made sure that their solvers can be run in batch mode using simple (or not so simple) command-line instructions. This allows users of their software to submit jobs to a headless HPC environment. Traditionally, this environment is a set of servers sitting behind a scheduler. The engineer or scientist would write a script of command-line instructions to be submitted to the scheduler. The same is true on Rescale. The user enters a set of command-line instructions to run their jobs on Rescale’s platform.

Let’s take OpenFOAM, for example. An OpenFOAM user will usually write an Allrun script and invoke it on Rescale by simply calling the script:

./Allrun

This is easy and applies to other solvers available on Rescale: LS-Dyna, CONVERGE, Star-CCM+, NX Nastran, XFlow and many more. All solvers on Rescale are instantiated using a simple command-line instruction.

The Headless Environment

Being able to run a solver using a command-line instruction does not mean the solver will run in batch. For example, trying to run Star-CCM+ without explicitly specifying to run the solver in batch mode would cause the program to launch its graphical user interface (GUI) and look for a display device, causing it to immediately exit. Star-CCM+ should, therefore, be called using a command-line instruction like:

starccm+ -power -np 4 -batch batch.java

This is simple enough. There is something to be said for being able to run both the batch solver and the GUI using the same program. Unfortunately, this type of implementation can be incomplete.

When ISVs decide to migrate their solver capabilities from the desktop environment to the HPC (batch) environment, they usually do so because they have implemented the ability to run their solver across more than one machine. A solver that can only run on a single machine benefits less from an HPC environment. In early iterations, ISVs may leave some latent artifacts of the original desktop implementation inside their batch solvers. Although these solvers can be executed from the command line, they may still require access to a display. Because we still want to be able to run these “almost-headless” solvers on Rescale, we make use of a tool called a virtual frame buffer.

The X Virtual Frame Buffer

The X virtual frame buffer (Xvfb) renders a virtual display in memory, so applications that are not truly headless can use it to render graphical elements. At Rescale, we use the virtual frame buffers as a last resort because there is a performance penalty to launching and running them. The use of Xvfb requires us to implement a wrapper around these solver programs. In its simplest form, this can be implemented as follows:

#!/bin/bash

# Wrapper script located at /usr/local/bin/solver

_d=1

Xvfb :${_d} > /tmp/xvfb-${_d}.log 2>&1 &

_xvfb_pid=$!

export DISPLAY=:${_d}

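# Quote "$@" so that arguments containing spaces are passed through intact

/path/to/real/solver "$@"

_status=$?

kill ${_xvfb_pid}

# Report the solver's exit status rather than that of kill

exit ${_status}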

This seems fairly simple. We launch a virtual frame buffer on a numbered display, tell our environment to use the display associated with the virtual frame buffer, launch our solver, and clean up at the end.

A Can Of Worms

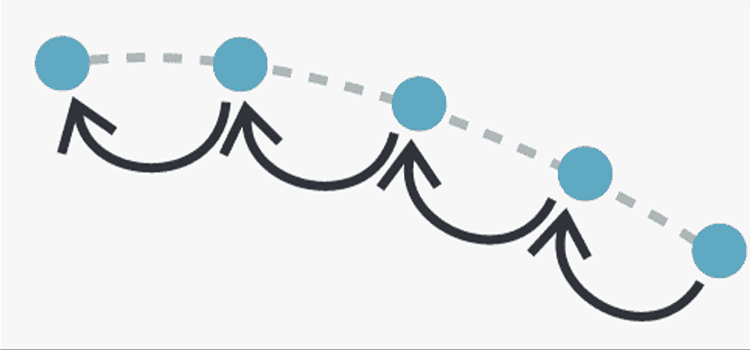

One very powerful feature on Rescale is the parameter sweep/design of experiment (DOE) functionality. We can execute multiple runs of a DOE in parallel, which also means that multiple runs can land on the same server. Let’s imagine running the above script twice on the same node. Each instantiation of the script will now try to launch a frame buffer on the same display. This can lead to all sorts of problems, such as race conditions and process corruption. Regardless of the low-level issues this may cause, the biggest high-level issue is a solver that hangs because of problems with the virtual frame buffer. Consider a user who starts a DOE with 100 runs at the end of the day and lets the job run overnight. The next morning, that user may realize that one run has been hanging the entire night due to issues with Xvfb. The other 99 runs may have finished within a couple of hours, but the one hanging run has kept the cluster up for the entire night. This kind of situation is one that we want to avoid at all costs.

The implementation of a virtual frame buffer requires us to write all kinds of robustness provisions into our wrapper script. We may decide to launch only a single Xvfb on a single display and use that display for all of our solver instantiations. We can check whether Xvfb is already running on that display and launch the frame buffer only if it isn’t:

if ! pgrep -f "Xvfb :${_d}" > /dev/null; then Xvfb :${_d} > /tmp/xvfb-${_d}.log 2>&1 & fi

This has the side effect that we never know when we can shut down the frame buffer, requiring us to leave it up at all times. This may sometimes be okay depending on the requirements of the solver. If it’s not okay, we would have to increment the display number for each solver process, and clean up each frame buffer when each solver finishes.
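The per-process alternative described above could look roughly like the following. This is a minimal sketch, not the wrapper we run in production. It assumes that the X server leaves a lock file named /tmp/.X<n>-lock for each display in use, and the function names find_free_display and run_on_free_display are made up for illustration. Checking for a lock file and then launching Xvfb is itself racy, so the sketch also verifies that Xvfb is still alive after a short grace period and moves on to the next display number if it is not.

```bash
#!/bin/bash

# Hypothetical helper: print the first display number >= $2 that has no
# X lock file (.X<n>-lock) in directory $1.
find_free_display() {
    local lock_dir=$1 d=$2
    while [ -e "${lock_dir}/.X${d}-lock" ]; do
        d=$((d + 1))
    done
    echo "$d"
}

# Hypothetical wrapper body: start a private Xvfb, run the given command
# against it, then shut the frame buffer down and return the command's status.
run_on_free_display() {
    local d xvfb_pid status tries=0
    d=$(find_free_display /tmp 1)
    while [ "$tries" -lt 5 ]; do
        Xvfb ":${d}" > "/tmp/xvfb-${d}.log" 2>&1 &
        xvfb_pid=$!
        sleep 1                                 # crude startup grace period
        if kill -0 "$xvfb_pid" 2>/dev/null; then
            break                               # Xvfb survived, the display is ours
        fi
        xvfb_pid=""                             # lost a race or display unusable
        d=$(find_free_display /tmp $((d + 1)))
        tries=$((tries + 1))
    done
    [ -n "$xvfb_pid" ] || return 1

    DISPLAY=":${d}" "$@"
    status=$?

    kill "$xvfb_pid" 2>/dev/null
    wait "$xvfb_pid" 2>/dev/null
    return "$status"
}
```

A wrapper would then call something like run_on_free_display /path/to/real/solver "$@". The one-second grace period is an arbitrary choice, and a slow node may need a longer one.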

We can also explicitly check whether a solver is hanging. We can launch the solver in the background and poll its pid from a foreground loop to track the status of the program. We can also write a retry loop around the solver instantiation to restart the solver if it fails the first time, which can happen if the frame buffer is still initializing when we call the solver.
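As a rough illustration of the polling and retry ideas, here is a small sketch. The function names run_with_watchdog and run_with_retries, the wall-clock limit, and the return code 124 for a timed-out run are all assumptions made for this example. A real solver would need a limit chosen from its expected run time, or a smarter hang test such as watching whether its log file is still growing.

```bash
#!/bin/bash

# Hypothetical helper: run a command in the background and poll its pid.
# If it is still running after $1 seconds, kill it and return 124.
run_with_watchdog() {
    local limit=$1; shift
    "$@" &
    local pid=$!
    local waited=0
    while kill -0 "$pid" 2>/dev/null; do
        if [ "$waited" -ge "$limit" ]; then
            kill "$pid" 2>/dev/null
            wait "$pid" 2>/dev/null || true     # reap the killed process
            return 124
        fi
        sleep 1
        waited=$((waited + 1))
    done
    wait "$pid"                                 # propagate the real exit status
}

# Hypothetical helper: try the command up to $1 times, each with a
# watchdog limit of $2 seconds, and stop at the first success.
run_with_retries() {
    local attempts=$1 limit=$2; shift 2
    local i status=1
    for ((i = 1; i <= attempts; i++)); do
        status=0
        run_with_watchdog "$limit" "$@" || status=$?
        [ "$status" -eq 0 ] && return 0
        echo "attempt $i failed with status $status" >&2
        sleep 1                                 # give the frame buffer time to settle
    done
    return "$status"
}
```

In a wrapper this might be used as run_with_retries 3 7200 /path/to/real/solver "$@", where the three attempts and the two-hour limit are placeholders.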

The Case of the Invalid License

One of the CFD solvers we support requires a frame buffer. A customer launched a simple job without specifying a valid license address. Two days later he was wondering whether his job was still running. It turned out that the solver had hung within seconds of being instantiated and had been sitting idle for 2 days. During debugging of the issue, we decided to inspect the virtual frame buffer. We took a screenshot using:

xwd -root -silent -display :${_d} | xwdtopnm | pnmtojpeg > ss.jpg

The resulting screenshot showed a window asking the user to enter a valid license location. This was obviously an artifact of the desktop implementation left in the batch solver. A true headless batch program would have just exited with a message to the user. We have since fixed this issue and are more careful when making any use of this tool, putting in robustness provisions as described before.
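The same screenshot pipeline can be wrapped in a small function so that a wrapper script can save an image of the frame buffer whenever it suspects a hang. This is only a sketch: the function name snapshot_display is hypothetical, and it assumes that xwd and the netpbm tools xwdtopnm and pnmtojpeg are installed on the node.

```bash
#!/bin/bash

# Hypothetical helper: save a JPEG screenshot of display $1 to file $2.
# Returns non-zero if a tool is missing or the display cannot be captured.
snapshot_display() {
    local display=$1 out=$2 tool
    for tool in xwd xwdtopnm pnmtojpeg; do
        if ! command -v "$tool" > /dev/null 2>&1; then
            echo "missing tool: $tool" >&2
            return 127
        fi
    done
    # Run in a subshell so that pipefail does not leak into the caller.
    (
        set -o pipefail
        xwd -root -silent -display "$display" | xwdtopnm | pnmtojpeg > "$out"
    )
}
```

A wrapper could call snapshot_display ":${_d}" /tmp/hang.jpg just before killing a stalled solver, so that a dialog like the license prompt above ends up in the job output instead of going unnoticed.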

Virtual Frame Buffer’s Post-processing Utility

A very useful utility of Xvfb is that we can use it to render post-processing graphics in a headless environment. We can now call tools such as ParaView or LS-PrePost to generate movies and images of scenes they normally render on-screen.

Here is an example that uses Xvfb, OpenFOAM and ParaView to generate a scene image: https://www.rescale.com/resources/software/openfoam/openfoam-motorbike-post/

Here is an example which uses Xvfb, LS-Dyna and LS-PrePost to generate a movie of a crash simulation: https://www.rescale.com/resources/software/ls-dyna/ls-dyna-post-processing/

Some of our users have used this capability to their advantage by creating a visual representation of their data, forgoing the need to download the raw data sets.

Lessons Learned

Since our first use of Xvfb, we’ve learned that its use sometimes leads to adverse and unforeseen side effects. We have since worked to make all our use of Xvfb as robust as possible to prevent the worst side effect of all, the stalled job. We have also used Xvfb to great benefit, as it allows us to run solvers that would not otherwise run in a traditional headless HPC environment. It also allows us to use certain post-processing tools to render images and movies in batch mode. We encourage ISVs who only have desktop implementations of their solvers to create solvers that run in a headless HPC environment, keeping in mind what it means to truly run a solver without a display.