What if Airport Security Were More Like On-Premise HPC?

Why high utilization doesn’t work for TSA and why it doesn’t work for HPC

Executive Summary:

- In the case of big compute power, the purchase of large capital assets can create an organizational misalignment of incentives that places the needs of the end user last

- Achieving high utilization rates of on-premise computing is a pyrrhic victory; it creates winners and losers and puts a governor on the pace of innovation

- Information technology leaders with high utilization rates of on-premise compute should establish a cloud bypass for work to encourage a culture of agility, innovation, and “outside-the-stacks” thinking

- When calculating the total cost of ownership (TCO) of on-premise computing, user experience, workflow cycle times, responsiveness to new requirements, and other factors must be considered

Airport Travelers and HPC Users Have the Same Complaints

While standing in line in airport security at LAX recently, travelers behind me began engaging in a familiar sport: wondering if there were better alternatives to the US airport security screening process. As some lines proved to be faster than others, the complaints ranged from line choice to the efficacy of the entire system. Having recently returned from several meetings with future users of cloud computing, the complaints were similar: wait times, capacity limitations, and perceived unfairness in the system.

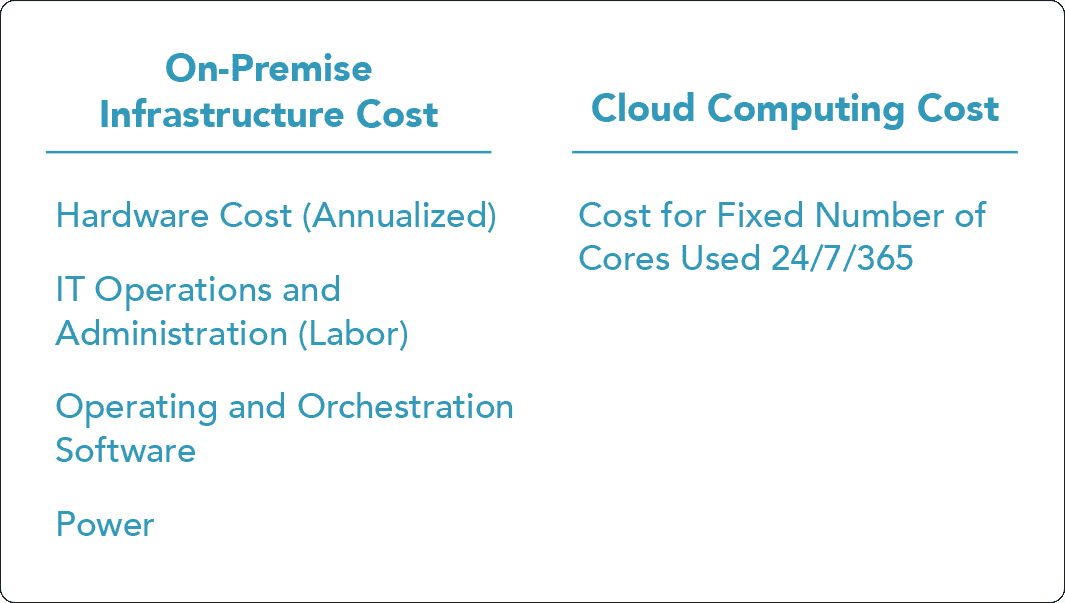

High utilization rates of on-premise computing assets are often cited in a cost-based defense of maintaining a pure on-premise strategy for big compute (HPC) workloads. The argument goes like this: the higher the utilization rate of an on-premise system, the more costly it is to lift and shift those workloads to the cloud. This frequently is a result of a total cost of ownership (TCO) study comparing an incomplete set of variables:

The above TCO comparison is woefully incomplete, but the missing pieces aside, even more visibly apparent is the key assumption underlying cloud computing: 100% utilization. The use of the assumption is understandable. Capital investments require financial justification and, depending on their scale, often detailed NPV analysis. Unfortunately, it is difficult to compare a fixed and capitalized expenditure to a variable and operational expenditure for these analyses. Forecasting opex requires detailed logging of compute usage and assumptions that past behavior can predict future requirements. For simplicity, it is easier to simply assume 100% utilization of cloud computing and move on. However, the organizational implications for 100% utilization of cloud computing versus 100% utilization of on-premise assets are very different. 100% utilization of a constrained on-premise compute asset implies queue times, a constant reevaluation of internal resource priorities, and slow reaction times to new requirements. 100% utilization of a certain portion of the immense cloud has none of these disadvantages.

This brings us back to our TSA story.

A TSA Nightmare

Imagine one day, the TSA agents at a particular airport received a peculiar directive: the taxpayers are extremely sensitive to the purchase of capitalized assets; and, as a result, it is now an agency priority to achieve 95% or greater capacity utilization of the newly installed scanners. What would be the consequences?

First, 95% utilization would require passenger processing through the line at all hours of the night, regardless of the fact that airplanes were only leaving and arriving between 6AM and midnight. Second, every 19 out of 20 passengers that arrived at the security line should expect a queue, regardless of the time they arrived. Third, during peak travel periods, wait times would increase exponentially. Fourth, in the long run, to achieve the targets, the TSA agents would be incentivized to shut down additional security lines and laterally transfer “excess” scanners to other airports. Somewhere in the aftermath is the passenger whose needs have been subordinated to the quest for high utilization rates. The psychology of the passenger changes, also. The passenger begins planning for long queue times, devoting otherwise productive time to gaming a system with limited predictability.

In the case of the purchase of a large, fixed-capacity compute system, the misalignment of incentives begins almost immediately after the purchase of the asset. Finance wants to optimize the return on the asset, putting pressure on Information Technology leaders to use the smallest possible asset at the highest levels of utilization for the longest amount of time. Meanwhile, hardware requirements continue to diverge and evolve outside the walls of the company, artificially constraining the company to decisions made years prior when business conditions were unlikely similar to present day. The very nature of a fixed asset creates winners and losers as workloads from some portions of the company are prioritized over others. Unlike airline travelers, however, engineers, researchers, and data scientists can be given options to bypass the system.

The cloud has inherent advantages relative to its on-premise counterpart. As a result, cloud big compute has earned its seat at the table in any organization that values agility, fast innovation cycles, and new approaches to problems. On-premise resources are inherently capacity-constrained and over time can place psychological governors to how employees think about finding solutions to problems. For example, an engineer may simply assume she has no other option and over-design a part rather than run a design study to understand sensitivity to key parameters. The cloud is not a panacea for all problems that need big compute. However, Information Technology leaders can do their part to encourage a culture of innovation by merely having a capable cloud strategy.

The cloud is more than TSA PreCheck, it is driving up on the tarmac and getting on the plane.

Learn more about the advantages of moving HPC to the cloud by downloading our free white paper: Motivations and IT Roadmap for Cloud HPC